Background

You’ve likely encountered this scenario: you’ve calculated an estimate for a particular parameter, and now you require a confidence interval. Seems straightforward, doesn’t it? However, the task becomes considerably more challenging if your estimator is a nonlinear function of other random variables. Whether you’re dealing with ratios, transformations, or intricate functional relationships, directly deriving the variance for your estimator can feel incredibly daunting. In some instances, the bootstrap might offer a solution, but it can also be computationally demanding.

Enter the Delta Method, a technique that harnesses the power of Taylor series approximations to assist in calculating confidence intervals within complex scenarios. By linearizing a function of random variables around their mean, the Delta Method provides a way to approximate the function’s variance (and consequently, confidence intervals). This effectively transforms a convoluted problem into a more manageable one. Let’s delve deeper together, assuming you already have a foundational understanding of hypothesis testing.

Notation

Before diving into the technical weeds, let’s set up some notation to keep things grounded. Let $X = (X_1, \dots, X_k)^T$ be a random vector of dimension $k$, with mean vector $\mu$ and covariance matrix $\Sigma$ (or simply a scalar variance $\sigma^2$ when $k = 1$). Suppose you have a continuously differentiable function $g: \mathbb{R}^k \to \mathbb{R}$, and you’re interested in approximating the variance of $g(X)$, denoted as $\text{Var}(g(X))$.

Diving Deeper

The Delta Method builds on a simple premise: for a smooth function $g$, we can approximate $g(X)$ around its mean $\mu$ using a first-order Taylor expansion:

\[g(X) \approx g(\mu) + \nabla g(\mu)^T (X - \mu),\]

where $\nabla g(\mu)$ is the gradient of $g$ evaluated at $\mu$, i.e., a $k \times 1$ vector of partial derivatives:

\[\nabla g(\mu) = \left[ \frac{\partial g}{\partial x_1}, \frac{\partial g}{\partial x_2}, \dots, \frac{\partial g}{\partial x_k} \right]^T.\]

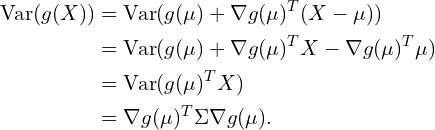

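Taking the variance of this linear approximation (the constant $g(\mu)$ drops out), the variance of $g(X)$ becomes:

\[\text{Var}(g(X)) \approx \nabla g(\mu)^T \Sigma \, \nabla g(\mu).\]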
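The step from the Taylor expansion to the variance formula is short enough to write out in full. It uses only two facts: adding a constant does not change a variance, and $\text{Var}(a^T X) = a^T \Sigma a$ for any fixed vector $a$ (here $a = \nabla g(\mu)$).

```latex
\begin{aligned}
\text{Var}\big(g(X)\big) &\approx \text{Var}\big(g(\mu) + \nabla g(\mu)^T (X - \mu)\big) \\
&= \text{Var}\big(\nabla g(\mu)^T X\big) \\
&= \nabla g(\mu)^T \, \text{Var}(X) \, \nabla g(\mu) \\
&= \nabla g(\mu)^T \Sigma \, \nabla g(\mu).
\end{aligned}
```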

In the univariate ($k = 1$) case, we have:

\[\text{Var}(g(X)) \approx g'(\mu)^2 \sigma^2.\]
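A quick way to build intuition for the univariate formula is to compare it with a simulation. The sketch below assumes $X \sim N(10, 1)$ and $g(x) = \log x$, so $g'(\mu) = 1/\mu$ and the Delta Method gives $\text{Var}(\log X) \approx \sigma^2 / \mu^2 = 0.01$. The distribution, the choice of $g$, the seed, and the number of draws are illustrative assumptions, and the Monte Carlo check is a swapped-in sanity test, not part of the Delta Method itself.

```python
import numpy as np

def delta_var_univariate(g_prime, mu, sigma2):
    """First-order Delta Method: Var(g(X)) ~= g'(mu)^2 * sigma^2."""
    return g_prime(mu) ** 2 * sigma2

# Illustrative assumption: X ~ N(10, 1) and g(x) = log(x), so g'(x) = 1 / x.
mu, sigma2 = 10.0, 1.0
approx = delta_var_univariate(lambda x: 1.0 / x, mu, sigma2)

# Monte Carlo check: simulate X, apply g, and take the sample variance.
rng = np.random.default_rng(seed=0)
x = rng.normal(loc=mu, scale=np.sqrt(sigma2), size=200_000)
simulated = np.var(np.log(x), ddof=1)

print(f"Delta Method: {approx:.5f}, simulation: {simulated:.5f}")
```

With these settings the two numbers agree to within a few percent. The gap widens as $\sigma / \mu$ grows, because the neglected higher-order Taylor terms become more important.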

If $X$ is a sample-based estimator (e.g., a sample mean or a vector of regression coefficients), then $\Sigma$ is replaced by its estimated covariance matrix $\hat{\Sigma}$, the gradient is evaluated at the estimate itself (since $\mu$ is unknown), and the square root of the resulting variance is an approximate standard error for $g(X)$. This approximation works well for large samples but may break down when variances are high, sample sizes are small, or $g$ is strongly nonlinear near $\mu$.
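In practice you plug in estimates for both $\mu$ and $\Sigma$. A minimal sketch for the ratio of two sample means computed from paired observations is shown below. The helper name `ratio_of_means_ci` is hypothetical, the data are simulated purely for illustration, and `z = 1.96` is the usual normal critical value for a 95% interval.

```python
import numpy as np

def ratio_of_means_ci(x1, x2, z=1.96):
    """Delta Method estimate, SE, and CI for mean(x1) / mean(x2).

    Hypothetical helper: x1 and x2 are paired samples of equal length.
    """
    x1, x2 = np.asarray(x1, dtype=float), np.asarray(x2, dtype=float)
    n = x1.size
    m1, m2 = x1.mean(), x2.mean()
    estimate = m1 / m2
    # Estimated covariance matrix of the vector of sample means: Cov(X) / n.
    cov_means = np.cov(np.vstack([x1, x2])) / n
    # Gradient of g(m1, m2) = m1 / m2, evaluated at the estimates (plug-in).
    grad = np.array([1.0 / m2, -m1 / m2**2])
    se = np.sqrt(grad @ cov_means @ grad)
    return estimate, se, (estimate - z * se, estimate + z * se)

# Simulated data, for illustration only.
rng = np.random.default_rng(seed=1)
x1 = rng.normal(5.0, 2.0, size=500)
x2 = rng.normal(10.0, 1.0, size=500)
est, se, (lo, hi) = ratio_of_means_ci(x1, x2)
print(f"estimate = {est:.3f}, SE = {se:.4f}, 95% CI = ({lo:.3f}, {hi:.3f})")
```

Using `np.cov` on the stacked samples keeps any correlation between the paired observations in $\hat{\Sigma}$, so the same code covers the dependent case.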

An Example

Let’s walk through an example to make this concrete. Suppose you’re studying the ratio of two independent random variables: ![]() , where

, where ![]() and

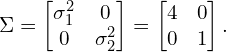

and ![]() . I know some of you want specific numbers, so we can set

. I know some of you want specific numbers, so we can set ![]() ,

, ![]() ,

, ![]() , and

, and ![]() .

.

We want to approximate the variance of $R$ using the Delta Method. Here is the step-by-step procedure to get there.

- Define $g(x_1, x_2) = x_1 / x_2$ and obtain its gradient. Here, $\partial g / \partial x_1 = 1 / x_2$ and $\partial g / \partial x_2 = -x_1 / x_2^2$, so the gradient at $\mu = (\mu_1, \mu_2)^T$ is:

\[\nabla g(\mu) = \left[ \frac{1}{\mu_2}, -\frac{\mu_1}{\mu_2^2} \right]^T.\]

- Evaluate $\nabla g(\mu)$ at $\mu_1 = 5$ and $\mu_2 = 10$. In our example,

\[\nabla g(\mu) = [0.1, -0.05]^T.\]

- Compute the variance approximation. Because $X_1$ and $X_2$ are independent, $\Sigma = \text{diag}(\sigma_1^2, \sigma_2^2)$. Thus, the approximate variance of $R$ is:

\[\text{Var}(R) \approx \nabla g(\mu)^T \Sigma \, \nabla g(\mu) = \frac{\sigma_1^2}{\mu_2^2} + \frac{\mu_1^2 \sigma_2^2}{\mu_2^4} = \frac{4}{100} + \frac{25 \cdot 16}{10{,}000} = 0.04 + 0.04 = 0.08.\]

And that’s it. We used the Delta Method to compute the approximate variance of $R$; its square root, $\sqrt{0.08} \approx 0.28$, is the corresponding approximate standard error.
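The three steps of the example translate directly into a few lines of NumPy, which also makes it easy to try other parameter values. This sketch uses $\mu_1 = 5$, $\mu_2 = 10$, $\sigma_1^2 = 4$, and $\sigma_2^2 = 16$ (values consistent with the variance calculation in the last step) and builds $\Sigma$ as a diagonal matrix because $X_1$ and $X_2$ are independent. The function name `grad_ratio` is illustrative.

```python
import numpy as np

# Parameters of the example (X1 and X2 independent, so Sigma is diagonal).
mu = np.array([5.0, 10.0])       # (mu_1, mu_2)
Sigma = np.diag([4.0, 16.0])     # diag(sigma_1^2, sigma_2^2)

# Step 1: gradient of g(x1, x2) = x1 / x2.
def grad_ratio(m):
    return np.array([1.0 / m[1], -m[0] / m[1] ** 2])

# Step 2: evaluate the gradient at the mean.
grad = grad_ratio(mu)            # [0.1, -0.05]

# Step 3: Var(R) ~= grad^T Sigma grad; the standard error is its square root.
var_R = grad @ Sigma @ grad
se_R = np.sqrt(var_R)
print(f"gradient = {grad}, Var(R) = {var_R:.4f}, SE(R) = {se_R:.4f}")
```

Changing `Sigma` to a full matrix with nonzero off-diagonal entries extends the same three steps to correlated $X_1$ and $X_2$.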

Bottom Line

- The Delta Method is a general-purpose way to obtain approximate standard errors, and hence confidence intervals, in non-standard situations.

- It works by linearizing nonlinear functions to approximate variances and standard errors.

- This technique works for any function that is differentiable at the mean (with a nonzero gradient there), making it a go-to tool in econometrics, biostatistics, and machine learning.

