Background

Causal inference fundamentally seeks to answer one question: what is the effect of a treatment or intervention? The challenge lies in ensuring that the comparison groups (treated versus untreated) are balanced in terms of their characteristics, so that differences in outcomes can be attributed solely to the intervention. This idea, often referred to as a “like-with-like” or “apples-to-apples” comparison, is as simple as it is intuitive.

Balance is thus fundamental to causal inference. Weighting methods, central to achieving this balance, have evolved to include two primary types: inverse propensity score weights and covariate balancing weights. This article briefly describes these weights, their mathematical foundations, and the intuition behind them, with an example in R to bring it all together.

Notation

To set the stage, we begin by establishing the potential outcome framework and laying out the notation for the discussion:

: Potential outcomes under treatment and control.

: Potential outcomes under treatment and control. : Treatment indicator (1 for treated, 0 for untreated).

: Treatment indicator (1 for treated, 0 for untreated). : Vector of covariates (i.e., control variables).

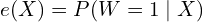

: Vector of covariates (i.e., control variables). : Propensity score, the probability of receiving treatment given covariates.

: Propensity score, the probability of receiving treatment given covariates.![Rendered by QuickLaTeX.com \mu_1=E[Y(1)]](https://yasenov.com/wp-content/ql-cache/quicklatex.com-8c6572c5af3d805d4f6fd9dcbe49cfc3_l3.png) : Mean outcome under treatment.

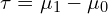

: Mean outcome under treatment. : Average treatment effect (ATE), the main object of interest.

: Average treatment effect (ATE), the main object of interest. : number of observations in the sample.

: number of observations in the sample. : number of observations in the treatment group.

: number of observations in the treatment group.

We observe a random sample of size $n$, $\{(Y_i, W_i, X_i)\}_{i=1}^{n}$, where $i$ indexes units (e.g., individuals, firms, schools) and the observed outcome is $Y_i = W_i Y_i(1) + (1 - W_i) Y_i(0)$. Under the assumptions of strong ignorability, namely unconfoundedness, $(Y(1), Y(0)) \perp W \mid X$, and overlap, $0 < e(X) < 1$, the ATE can be identified and estimated.

Diving Deeper

Inverse Propensity Score Weights

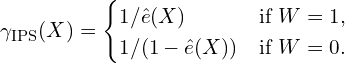

Inverse propensity score (IPS) weights rely on the estimated propensity score ![]() . The weights for treated and untreated groups are defined as:

. The weights for treated and untreated groups are defined as:

IPS weights intuitively correct for the unequal probability of treatment assignment. If an individual has a low probability of treatment ($\hat{e}(X_i)$ close to 0) but is treated, their weight is large, amplifying their influence in the analysis: they stand in for the many similar individuals who went untreated. Conversely, treated individuals with high treatment probabilities receive weights close to one, preventing their overrepresentation. The same logic applies to the control group, with $1-\hat{e}(X_i)$ in place of $\hat{e}(X_i)$.

A few notes. First, these weights adjust the observed data such that the distribution of covariates in the weighted treated and control groups resembles that of the overall population. This helps mitigate selection bias, ensuring that comparisons between treated and control groups reflect a treatment effect rather than confounded differences in covariates (e.g., the control group is older).
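The balancing property can be checked numerically. The base-R sketch below is illustrative and not from the original post: it reuses the data-generating process of the example later in the article and, because the data are simulated, weights units by the true propensity score rather than an estimate. The helper `smd()` is a hypothetical name for a standardized mean difference (treated minus control, scaled by the pooled unweighted standard deviation). Weighting should shrink the raw imbalance in `X1` and `X2` toward zero, although in a finite sample it will not vanish exactly.

```r
set.seed(1988)
n  <- 1000
X1 <- rnorm(n)
X2 <- rnorm(n)
e_true <- plogis(0.5 * X1 - 0.25 * X2)   # true propensity score e(X), known only in simulation
W  <- rbinom(n, 1, e_true)

# Oracle IPS weights: 1/e(X) for treated units, 1/(1 - e(X)) for controls
w <- ifelse(W == 1, 1 / e_true, 1 / (1 - e_true))

# Standardized mean difference (treated minus control), optionally weighted;
# the denominator uses the pooled unweighted standard deviation
smd <- function(x, W, w = rep(1, length(x))) {
  m1 <- weighted.mean(x[W == 1], w[W == 1])
  m0 <- weighted.mean(x[W == 0], w[W == 0])
  (m1 - m0) / sqrt((var(x[W == 1]) + var(x[W == 0])) / 2)
}

smd_raw <- c(X1 = smd(X1, W), X2 = smd(X2, W))
smd_ips <- c(X1 = smd(X1, W, w), X2 = smd(X2, W, w))
print(round(rbind(unweighted = smd_raw, ips = smd_ips), 3))
```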

A second key property is that the weighted average of the outcomes in the treated group is a consistent estimator of the mean outcome under treatment, $\mu_1$. This can be expressed mathematically as:

$$\hat{\mu}_1=\frac{\sum_i W_i Y_i/\hat{e}(X_i)}{\sum_i W_i/\hat{e}(X_i)}=\frac{\sum_i \gamma_{\text{IPS}}(X_i)\, W_i Y_i}{\sum_i \gamma_{\text{IPS}}(X_i)\, W_i}.$$

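This equality is particularly useful in the next step, when estimating the treatment effect $\tau$: the control-group mean $\hat{\mu}_0$ is constructed analogously with weights $1/(1-\hat{e}(X_i))$, and $\hat{\tau} = \hat{\mu}_1 - \hat{\mu}_0$.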
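Why does weighting by the inverse propensity score recover $\mu_1$? A short population-level derivation, assuming the true $e(X)$ is known, uses three facts: the observed outcome of a treated unit equals its treated potential outcome ($WY = W\,Y(1)$), unconfoundedness (which lets the conditional expectation factor given $X$), and overlap (so that $e(X) > 0$).

```latex
\begin{aligned}
E\!\left[\frac{W\,Y}{e(X)}\right]
  &= E\!\left[\frac{W\,Y(1)}{e(X)}\right]
   = E\!\left[\frac{E[W \mid X]\;E[Y(1) \mid X]}{e(X)}\right] \\
  &= E\big[E[Y(1) \mid X]\big] = \mu_1 .
\end{aligned}
```

Replacing $Y$ by 1 in the same argument gives $E[W/e(X)] = 1$, so $\sum_i W_i/e(X_i)$ has expectation $n$; dividing by this sum rather than by $n$ yields the normalized estimator above, whose weights sum to one within the treated group. The control-group mean $\mu_0 = E[Y(0)]$ follows symmetrically with $1 - W$ and $1 - e(X)$.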

Third, the propensity scores, and hence the IPS weights, are generally unknown and must be estimated from the data. A leading exception is randomized experiments, where the researcher sets the probability of treatment; outside of experiments, however, this is uncommon. Traditionally, practitioners use methods like logistic regression to first obtain $\hat{e}(X)$ and then take reciprocals ($1/\hat{e}(X)$ for treated units and $1/(1-\hat{e}(X))$ for controls) to construct the weights.
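A minimal base-R sketch of this two-step recipe, written for illustration rather than taken from the post. It mirrors the data-generating process of the example below, uses `glm()` with a logit link for $\hat{e}(X)$, builds the weights by taking reciprocals, and plugs them into the normalized estimators of $\mu_1$ and $\mu_0$. Because the outcome is simulated with a treatment coefficient of 2, the estimate should land near 2, up to sampling noise.

```r
set.seed(1988)
n  <- 1000
X1 <- rnorm(n)
X2 <- rnorm(n)
W  <- rbinom(n, 1, plogis(0.5 * X1 - 0.25 * X2))
Y  <- 3 + 2 * W + X1 + X2 + rnorm(n)        # true ATE is 2

# Step 1: estimate e(X) with a logistic regression
ps_fit <- glm(W ~ X1 + X2, family = binomial())
e_hat  <- fitted(ps_fit)                    # fitted probabilities P(W = 1 | X)

# Step 2: take reciprocals to build the IPS weights
w_ips <- ifelse(W == 1, 1 / e_hat, 1 / (1 - e_hat))

# Step 3: normalized (ratio) estimators of mu_1 and mu_0, then the ATE
mu1_hat <- sum(w_ips * W * Y) / sum(w_ips * W)
mu0_hat <- sum(w_ips * (1 - W) * Y) / sum(w_ips * (1 - W))
ate_ips <- mu1_hat - mu0_hat
cat("IPS ATE estimate:", round(ate_ips, 3), "\n")
```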

Using IPS weights entails two primary challenges. First, when $\hat{e}(X)$ is close to 0 or 1, the weights can become excessively large, leading to high variance in the estimates. Practitioners then often trim observations with “too large” or “too small” $\hat{e}(X)$ values. Second, misspecification of the model for $\hat{e}(X)$ can lead to poor covariate balance, introducing bias. More flexible methods, such as machine learning models, can alleviate this problem.
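Trimming can be sketched in a few lines of base R. The cutoffs $[0.1, 0.9]$ are an assumption here, a commonly used rule of thumb rather than something this article prescribes, and the data-generating process is a hypothetical one with strong selection on `X1` so that extreme weights actually appear. Keep in mind that trimming changes the estimand: the result is the ATE for the subpopulation with adequate overlap, which coincides with the overall ATE here only because the simulated effect is constant.

```r
set.seed(1988)
n  <- 1000
X1 <- rnorm(n)
W  <- rbinom(n, 1, plogis(2 * X1))   # hypothetical DGP with strong selection
Y  <- 3 + 2 * W + X1 + rnorm(n)      # constant treatment effect of 2

# Estimated propensity scores and IPS weights
e_hat <- fitted(glm(W ~ X1, family = binomial()))
w_ips <- ifelse(W == 1, 1 / e_hat, 1 / (1 - e_hat))

# Trim: keep units whose estimated propensity score lies in [0.1, 0.9]
keep <- e_hat >= 0.1 & e_hat <= 0.9

# Difference in weighted means (the normalized IPS estimator)
ipw_ate <- function(Y, W, w) {
  weighted.mean(Y[W == 1], w[W == 1]) - weighted.mean(Y[W == 0], w[W == 0])
}

cat("Units dropped by trimming:", sum(!keep), "\n")
cat("Largest weight, full vs. trimmed:",
    round(max(w_ips), 1), "vs.", round(max(w_ips[keep]), 1), "\n")
cat("ATE, full sample:   ", round(ipw_ate(Y, W, w_ips), 3), "\n")
cat("ATE, trimmed sample:", round(ipw_ate(Y[keep], W[keep], w_ips[keep]), 3), "\n")
```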

Software Packages: MatchIt, Matching.

Covariate Balancing Weights

An alternative approach seeks weights that directly balance the dataset at hand. Typically, this is framed as a constrained optimization problem that restricts the maximum imbalance in $X$ between the treatment and control groups.

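The weights $\gamma$ are obtained by solving:

$$\hat{\gamma}=\arg\min_{\gamma}\;\text{Imbalance}(\gamma)+\lambda\,\text{Penalty}(\gamma),$$

where “Imbalance” measures covariate discrepancies between groups, “Penalty” discourages extreme weights, and $\lambda \geq 0$ is a tuning parameter governing the trade-off between the two. A common formulation balances means: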

$$\frac{1}{n}\sum_{i=1}^{n} W_i\,\gamma(X_i)\,f(X_i)\approx\frac{1}{n}\sum_{i=1}^{n} f(X_i),$$

with $f(X_i) = X_i$. The same idea can be applied to higher moments of $X$, such as the variance or skewness. Examples of methods relying on this type of weights include Hainmueller (2012), Chan et al. (2016), and Athey et al. (2018), among many others.

Covariate balancing weights bypass the need to explicitly estimate $e(X)$. Instead, they solve for weights that ensure the treated and control groups are balanced across predefined covariates or their transformations. This method aligns closely with the goal of causal inference: achieving balance. The main challenge is selecting the right set of covariates (or functions of them) to balance. Variance estimation can also be more involved, although modern statistical packages handle it.
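To make the optimization concrete, here is a base-R sketch in the spirit of entropy balancing (Hainmueller, 2012), adapted to the ATE by reweighting each group toward the full sample. It is illustrative, not the `ebal` or `WeightIt` implementation: within each treatment group it finds the weights closest to uniform in Kullback-Leibler divergence whose weighted means of $f(X) = (X_1, X_2)$ exactly match the full-sample means, mirroring the mean-balancing formulation above with the imbalance constrained to zero and entropy playing the role of the penalty. The hypothetical helper `ebal_weights()` solves the problem through its convex dual, in which the weights take the form $w_i \propto \exp(\lambda^\top [f(X_i) - \bar{f}])$; the DGP again mirrors the example below.

```r
set.seed(1988)
n  <- 1000
X1 <- rnorm(n)
X2 <- rnorm(n)
W  <- rbinom(n, 1, plogis(0.5 * X1 - 0.25 * X2))
Y  <- 3 + 2 * W + X1 + X2 + rnorm(n)
X  <- cbind(X1, X2)
target <- colMeans(X)                  # full-sample covariate means

# Entropy-balancing weights for one group via the convex dual:
# minimize log(sum(exp(Cc %*% lambda))); at the optimum the gradient,
# i.e. the weighted mean of the centered covariates, is zero.
ebal_weights <- function(C, target) {
  Cc  <- sweep(C, 2, target)           # center balance functions at the target
  obj <- function(lam) log(sum(exp(Cc %*% lam)))
  grad <- function(lam) {
    p <- exp(Cc %*% lam)
    p <- p / sum(p)
    colSums(Cc * as.vector(p))         # weighted mean of centered covariates
  }
  fit <- optim(rep(0, ncol(C)), obj, grad, method = "BFGS",
               control = list(reltol = 1e-12, maxit = 500))
  w <- exp(Cc %*% fit$par)
  as.vector(w / sum(w))                # normalize to sum to one
}

w1 <- ebal_weights(X[W == 1, ], target)
w0 <- ebal_weights(X[W == 0, ], target)

bal1 <- colSums(X[W == 1, ] * w1)      # weighted treated means
bal0 <- colSums(X[W == 0, ] * w0)      # weighted control means
ate_ebal <- sum(w1 * Y[W == 1]) - sum(w0 * Y[W == 0])
print(rbind(target = target, treated = bal1, control = bal0))
cat("Entropy-balancing ATE estimate:", round(ate_ebal, 3), "\n")
```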

Software Packages: WeightIt, ebal.

Hybrid Approach

Imai and Ratkovic (2014) developed a hybrid method that combines elements of the covariate balancing and inverse propensity score approaches. Their method, known as the Covariate Balancing Propensity Score (CBPS), estimates propensity scores while simultaneously targeting covariate balance. Instead of relying on a tuning parameter, CBPS enforces balance through the following moment conditions:

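$$\frac{1}{n}\sum_{i=1}^{n}\left[\frac{W_i}{e(X_i;\gamma)}-\frac{1-W_i}{1-e(X_i;\gamma)}\right]f(X_i)=0.$$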

Additionally, CBPS estimates the propensity score parameters $\gamma$ by solving the standard likelihood score equation:

$$\sum_{i}\frac{W_i - e(X_i;\gamma)}{e(X_i;\gamma)\left[1-e(X_i;\gamma)\right]}\,\frac{\partial e(X_i;\gamma)}{\partial \gamma}=0.$$

CBPS is implemented within the Generalized Method of Moments (GMM) framework from the econometrics literature: the balance conditions and the score equation together provide more equations than parameters, so they are combined and solved jointly. This approach pushes the estimated propensity scores toward producing balanced covariates, potentially improving the robustness of causal inference. CBPS can be implemented using available GMM software and is particularly useful when traditional propensity score models fail to achieve adequate balance.
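A stripped-down, just-identified variant of CBPS can be sketched in base R. It drops the likelihood score and solves only the sample balance conditions $\frac{1}{n}\sum_i \left[W_i/e(X_i;\gamma) - (1-W_i)/(1-e(X_i;\gamma))\right] f(X_i) = 0$, with $f(X) = (1, X_1, X_2)$ and a logistic model for $e(X;\gamma)$, using Newton's method started at the logistic-regression estimate. This is an illustrative sketch under those assumptions, not the `CBPS` package's over-identified GMM estimator. Newton's method is well behaved here because, for the logit link, the balance conditions are the gradient of a concave function of $\gamma$, so their Jacobian is negative definite.

```r
set.seed(1988)
n  <- 1000
X1 <- rnorm(n)
X2 <- rnorm(n)
W  <- rbinom(n, 1, plogis(0.5 * X1 - 0.25 * X2))
Y  <- 3 + 2 * W + X1 + X2 + rnorm(n)
Z  <- cbind(1, X1, X2)                    # f(X): intercept plus covariates

# Start Newton's method at the logistic-regression MLE
g <- glm.fit(Z, W, family = binomial())$coefficients

for (iter in 1:50) {
  e    <- plogis(drop(Z %*% g))
  m    <- W / e - (1 - W) / (1 - e)       # signed inverse-probability weights
  gbar <- colMeans(m * Z)                 # sample balance conditions
  if (max(abs(gbar)) < 1e-10) break
  d    <- W * (1 - e) / e + (1 - W) * e / (1 - e)
  J    <- -crossprod(Z, d * Z) / n        # Jacobian of gbar (negative definite)
  g    <- g - solve(J, gbar)              # Newton step
}

e       <- plogis(drop(Z %*% g))
w_cbps  <- ifelse(W == 1, 1 / e, 1 / (1 - e))
mean_X1 <- c(treated = weighted.mean(X1[W == 1], w_cbps[W == 1]),
             control = weighted.mean(X1[W == 0], w_cbps[W == 0]))
ate_cbps <- weighted.mean(Y[W == 1], w_cbps[W == 1]) -
            weighted.mean(Y[W == 0], w_cbps[W == 0])
print(mean_X1)
cat("Just-identified CBPS ATE estimate:", round(ate_cbps, 3), "\n")
```

Because the intercept is among the balance functions, the normalized weighted means of each covariate coincide exactly (up to numerical tolerance) in the two groups, which is the sense in which CBPS "bakes in" balance.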

Software Packages: CBPS.

An Example

Let’s illustrate these methods in practice with R. Consider a dataset with treatment $W$, outcome $Y$, and covariates $X_1$ and $X_2$. We estimate the ATE using IPS weights, entropy balancing weights, and CBPS weights, all computed with the WeightIt package. The exercise starts with generating some synthetic data.

rm(list=ls())

library(MASS)

library(WeightIt)

set.seed(1988)

n <- 1000

X1 <- rnorm(n)

X2 <- rnorm(n)

W <- rbinom(n, 1, plogis(0.5 * X1 - 0.25 * X2))  # treatment depends on X1 and X2

Y <- 3 + 2 * W + X1 + X2 + rnorm(n)  # true ATE is 2

data = data.frame(Y, W, X1, X2)

We define helper functions that compute the weights and the associated average treatment effect.

compute_weights <- function(method) {

weightit(W ~ X1 + X2, method = method, data = data)$weights

}

compute_ate <- function(weights) {

weighted.mean(Y[W == 1], weights = weights[W == 1]) -

weighted.mean(Y[W == 0], weights = weights[W == 0])

}

Next, we compute the three sets of weights.

ips_weights <- compute_weights("glm")

ebal_weights <- compute_weights("ebal")

cbps_weights <- compute_weights("cbps")

Finally, we estimate the average treatment effects and print the results.

ips_ate <- compute_ate(ips_weights)

ebal_ate <- compute_ate(ebal_weights)

cbps_ate <- compute_ate(cbps_weights)

cat("ATE (IPS Weights):", ips_ate, "\n")

>ATE (IPS Weights): 2.287048

cat("ATE (Entropy Balance Weights):", ebal_ate, "\n")

>ATE (Entropy Balance Weights): 2.287048

cat("ATE (CBPS Weights):", cbps_ate, "\n")

>ATE (CBPS Weights): 2.287048

The weights are all very highly correlated with each other (not shown above), so they yield nearly identical results. For simplicity, I have ignored variance estimation and confidence intervals.

You can download the code from this GitHub repo.

Where to Learn More

This article was inspired by Ben-Michael et al. (2021) and Chattopadhyay et al. (2020). Both references are great starting points. There are plenty of accessible materials on the topic online; my favorite is Imbens (2015). For more in-depth content, turn to Imbens and Rubin's (2015) seminal textbook.

Bottom Line

- Covariate balance between the treatment and control groups is at the core of causal inference.

- There are two broad classes of weights that achieve such balance.

- IPS weights adjust for treatment probability but can be unstable.

- Covariate balancing weights directly target balance, bypassing propensity score estimation.

References

Athey, S., Imbens, G. W., & Wager, S. (2018). Approximate residual balancing: debiased inference of average treatment effects in high dimensions. Journal of the Royal Statistical Society Series B: Statistical Methodology, 80(4), 597-623.

Ben-Michael, E., Feller, A., Hirshberg, D. A., & Zubizarreta, J. R. (2021). The balancing act in causal inference. arXiv preprint arXiv:2110.14831.

Chan, K. C. G., Yam, S. C. P., & Zhang, Z. (2016). Globally efficient non-parametric inference of average treatment effects by empirical balancing calibration weighting. Journal of the Royal Statistical Society Series B: Statistical Methodology, 78(3), 673-700.

Chattopadhyay, A., Hase, C. H., & Zubizarreta, J. R. (2020). Balancing vs modeling approaches to weighting in practice. Statistics in Medicine, 39(24), 3227-3254.

Hainmueller, J. (2012). Entropy balancing for causal effects: A multivariate reweighting method to produce balanced samples in observational studies. Political Analysis, 20(1), 25-46.

Hirshberg, D. A., & Wager, S. (2018). Augmented minimax linear estimation for treatment and policy evaluation.

Imai, K., & Ratkovic, M. (2014). Covariate balancing propensity score. Journal of the Royal Statistical Society Series B: Statistical Methodology, 76(1), 243-263.

Imbens, G. W., & Rubin, D. B. (2015). Causal inference for statistics, social, and biomedical sciences: An introduction. Cambridge University Press.

Imbens, G. W. (2015). Matching methods in practice: Three examples. Journal of Human Resources, 50(2), 373-419.

Li, F., Morgan, K. L., & Zaslavsky, A. M. (2018). Balancing covariates via propensity score weighting. Journal of the American Statistical Association, 113(521), 390-400.

Rosenbaum, P. R., & Rubin, D. B. (1983). The central role of the propensity score in observational studies for causal effects. Biometrika, 70(1), 41-55.
