Background

This is a mistake I make with data all the time. What's more, I have seen many well-known professors and data masters fall for it too. It goes like this.

I see a graph with two bars depicting two sample means placed right next to each other. The presenter, being a good data scientist, has added error bars representing 95% confidence intervals. The side-by-side placement usually implies that the two quantities are about to be compared. My eyes immediately check whether the two confidence intervals overlap. When they do not, I quickly conclude that the two means are statistically significantly different from each other and, thus, the presenter has uncovered an exciting truth about the world.

This naïve but all too common approach to judging statistical significance is wrong. Here is why.

The Basics of Confidence Intervals

Let’s set up a toy example following Schenker and Gentleman (2001). Imagine we have two quantities, $Q_1$ and $Q_2$, and we are interested in testing whether they are statistically different from each other. These could stand for US and UK sales, or for user engagement on Android and iOS devices. For simplicity, we assume all friendly statistical properties (e.g., large and random samples, well-behaved distributions, consistent estimators for all quantities, etc.).

We are interested in whether the population values of $Q_1$ and $Q_2$ are equal. The null hypothesis ($H_0$) states that they indeed are:

$$H_0\colon Q_1 = Q_2 \tag{1}$$

We will denote our estimates of $Q_1$ and $Q_2$ by $\hat{Q}_1$ and $\hat{Q}_2$, and refer to their estimated standard errors as $SE_1$ and $SE_2$. The corresponding 95% confidence intervals for $Q_1$ and $Q_2$ are given by the following:

$$\hat{Q}_1 \pm 1.96\,SE_1 \tag{2}$$

and

$$\hat{Q}_2 \pm 1.96\,SE_2 \tag{3}$$

So far, so good.

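Importantly, note that we can also construct a confidence interval for the difference $Q_1 - Q_2$. Assuming the two estimates are independent, the standard error of $\hat{Q}_1 - \hat{Q}_2$ is $\sqrt{SE_1^2 + SE_2^2}$, so the interval is:

$$(\hat{Q}_1 - \hat{Q}_2) \pm 1.96\sqrt{SE_1^2 + SE_2^2} \tag{4}$$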
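Intervals (2) through (4) are simple enough to compute directly. Here is a minimal sketch, assuming the normal critical value 1.96 and independent estimates; the function names `ci` and `ci_difference` are hypothetical helpers, not part of any library.

```python
import math

Z = 1.96  # two-sided critical value for a 95% interval (normal approximation)


def ci(estimate: float, se: float, z: float = Z) -> tuple[float, float]:
    """Equations (2) and (3): estimate +/- z * SE."""
    return (estimate - z * se, estimate + z * se)


def ci_difference(q1: float, se1: float, q2: float, se2: float,
                  z: float = Z) -> tuple[float, float]:
    """Equation (4): (q1 - q2) +/- z * sqrt(se1^2 + se2^2).

    Assumes the two estimates are independent, so their variances add.
    """
    se_diff = math.sqrt(se1 ** 2 + se2 ** 2)
    return ci(q1 - q2, se_diff, z)
```

Keeping `z` as a parameter makes it easy to switch confidence levels, e.g. `z=2.576` for 99% intervals.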

Now, let me describe the two approaches to determining statistical significance based on these intervals.

Two Ways to Determine Significance

The naïve method (the mistake I make all the time):

- Examine whether the two confidence intervals (for $Q_1$ and $Q_2$) overlap.
- Reject the null hypothesis if they do not overlap, and do not reject it otherwise.

The correct method:

- Examine the confidence interval for the difference between the two quantities, $Q_1 - Q_2$.
- Reject the null hypothesis if it does not contain 0, and do not reject it otherwise.
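The two decision rules above can be written side by side as a sketch. It assumes 95% normal-approximation intervals and independent estimates; `naive_reject` and `correct_reject` are hypothetical names chosen for illustration.

```python
import math

Z = 1.96  # critical value for 95% intervals


def naive_reject(q1: float, se1: float, q2: float, se2: float, z: float = Z) -> bool:
    """Naive rule: reject H0 only if the two individual intervals do NOT overlap."""
    lo1, hi1 = q1 - z * se1, q1 + z * se1
    lo2, hi2 = q2 - z * se2, q2 + z * se2
    overlap = lo1 <= hi2 and lo2 <= hi1
    return not overlap


def correct_reject(q1: float, se1: float, q2: float, se2: float, z: float = Z) -> bool:
    """Correct rule: reject H0 if the interval for q1 - q2 excludes 0."""
    half_width = z * math.sqrt(se1 ** 2 + se2 ** 2)
    diff = q1 - q2
    return not (diff - half_width <= 0 <= diff + half_width)
```

The rules can disagree. With `q1=3.0`, `q2=0.0` and both standard errors equal to 1, the individual intervals (1.04, 4.96) and (-1.96, 1.96) overlap, so `naive_reject` returns `False`, yet the difference interval $3 \pm 2.77$ excludes 0, so `correct_reject` returns `True`.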

Diving Deeper

To understand why the naïve approach is wrong, let’s examine the length of these confidence intervals.

Under the naïve approach, the two confidence intervals overlap if and only if the following interval contains 0:

$$(\hat{Q}_1 - \hat{Q}_2) \pm 1.96\,(SE_1 + SE_2) \tag{5}$$

This is the interval on which the decision under the naïve approach is based.
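The equivalence between overlap and interval (5) takes one line of algebra. Intervals (2) and (3) overlap exactly when each interval's lower endpoint lies below the other's upper endpoint:

$$
\begin{aligned}
&\hat{Q}_1 - 1.96\,SE_1 \le \hat{Q}_2 + 1.96\,SE_2
\quad\text{and}\quad
\hat{Q}_2 - 1.96\,SE_2 \le \hat{Q}_1 + 1.96\,SE_1 \\
\iff\; &-1.96\,(SE_1 + SE_2) \le \hat{Q}_1 - \hat{Q}_2 \le 1.96\,(SE_1 + SE_2) \\
\iff\; &0 \in (\hat{Q}_1 - \hat{Q}_2) \pm 1.96\,(SE_1 + SE_2).
\end{aligned}
$$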

Let’s compare this expression with the confidence interval for the difference ![]() on which the decision under the correct approach is based.

on which the decision under the correct approach is based.

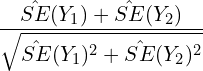

Their ratio is equal to:

(6)

This ratio is greater than one whenever both standard errors are positive. In other words, the interval used under the naïve approach is wider than the one used under the correct approach.
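Squaring the numerator of (6) shows why the ratio exceeds one:

$$(SE_1 + SE_2)^2 = SE_1^2 + SE_2^2 + 2\,SE_1 SE_2 > SE_1^2 + SE_2^2 \quad \text{when } SE_1, SE_2 > 0,$$

and taking square roots gives $SE_1 + SE_2 > \sqrt{SE_1^2 + SE_2^2}$. The cross term $2\,SE_1 SE_2$ is the excess the naïve method picks up: adding the two half-widths treats the standard errors as if they combined linearly, while for independent estimates it is the variances that add.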

Hence, when the two quantities are equal to each other (i.e., $H_0$ is true), the naïve method is more conservative than its nominal 5% level suggests (it rejects less often; it under-rejects). When they are different ($H_0$ is false), the naïve method has lower power: it fails to reject the null more often than the correct method does.
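To put rough numbers on this, the sketch below computes the exact rejection probability of each rule, assuming $\hat{Q}_1 - \hat{Q}_2$ is normally distributed around the true difference with standard deviation $\sqrt{SE_1^2 + SE_2^2}$ and treating the standard errors as known. The function name `rejection_prob` and the example values (SEs of 0.05, a true difference of 0.1) are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal, N(0, 1)
Z = 1.96                   # critical value for 95% intervals


def rejection_prob(true_diff: float, se1: float, se2: float,
                   naive: bool, z: float = Z) -> float:
    """Probability that a rule rejects H0: Q1 = Q2.

    Assumes Q1_hat - Q2_hat ~ N(true_diff, se1^2 + se2^2) with known SEs.
    Both rules reject when |Q1_hat - Q2_hat| > z * w, where
    w = se1 + se2 for the naive overlap rule (interval (5)) and
    w = sqrt(se1^2 + se2^2) for the correct rule (interval (4)).
    """
    sd = (se1 ** 2 + se2 ** 2) ** 0.5
    w = (se1 + se2) if naive else sd
    threshold = z * w
    # P(diff < -threshold) + P(diff > threshold)
    return (STD_NORMAL.cdf((-threshold - true_diff) / sd)
            + 1 - STD_NORMAL.cdf((threshold - true_diff) / sd))


for true_diff in (0.0, 0.1):
    correct = rejection_prob(true_diff, 0.05, 0.05, naive=False)
    naive = rejection_prob(true_diff, 0.05, 0.05, naive=True)
    print(f"true difference {true_diff}: correct {correct:.3f}, naive {naive:.3f}")
```

With equal standard errors and $H_0$ true, the correct rule rejects about 5% of the time, while the naïve rule rejects well under 1% of the time; with a true difference of 0.1, the naïve rule's power is also markedly lower.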

The discrepancy between the two methods is largest when the ratio above is large. This happens when $SE_1$ and $SE_2$ are equal, in which case the ratio reaches its maximum of $\sqrt{2} \approx 1.41$. Conversely, when one of $SE_1$ and $SE_2$ is much greater than the other, the ratio is close to one, and the two methods yield very similar results.
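A quick sweep over ratio (6) illustrates this behavior. This is a sketch; `width_ratio` is a hypothetical helper name.

```python
import math


def width_ratio(se1: float, se2: float) -> float:
    """Equation (6): naive half-width divided by correct half-width."""
    return (se1 + se2) / math.sqrt(se1 ** 2 + se2 ** 2)


# Hold SE_1 fixed at 1 and let SE_2 shrink relative to it.
for se2 in (1.0, 0.5, 0.1, 0.01):
    print(f"SE2/SE1 = {se2:>5}: ratio = {width_ratio(1.0, se2):.3f}")
```

The ratio starts at $\sqrt{2}$ when the standard errors are equal and falls toward 1 as one standard error dominates. It depends only on $SE_2 / SE_1$ and is symmetric in the two arguments.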

An Example

Schenker and Gentleman (2001) give a numerical example where $Q_1$ and $Q_2$ measure proportions. They set $\hat{Q}_1 = 0.56$, $\hat{Q}_2 = 0.44$, and $SE_1 = SE_2 = 0.0351$.

In this case, the two confidence intervals for $Q_1$ and $Q_2$ are $(0.49, 0.63)$ and $(0.37, 0.51)$, respectively. The two intervals overlap, so under the naïve approach, we conclude that the two population proportions are not significantly different.

However, the confidence interval for the difference $Q_1 - Q_2$ is $(0.02, 0.22)$. It does not contain 0, so under the correct approach we conclude that the two proportions are statistically significantly different.
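The example can be reproduced in a few lines. This sketch assumes the inputs $\hat{Q}_1 = 0.56$, $\hat{Q}_2 = 0.44$ and $SE_1 = SE_2 = 0.0351$, uses the 1.96 critical value, and rounds the printed endpoints to two decimals.

```python
import math

Z = 1.96  # critical value for 95% intervals

# Example inputs: two estimated proportions with equal standard errors.
q1_hat, q2_hat = 0.56, 0.44
se1 = se2 = 0.0351

# Individual intervals, equations (2) and (3), and the naive overlap check.
ci1 = (q1_hat - Z * se1, q1_hat + Z * se1)
ci2 = (q2_hat - Z * se2, q2_hat + Z * se2)
overlap = ci1[0] <= ci2[1] and ci2[0] <= ci1[1]

# Interval for the difference, equation (4).
half_width = Z * math.sqrt(se1 ** 2 + se2 ** 2)
ci_diff = (q1_hat - q2_hat - half_width, q1_hat - q2_hat + half_width)

print("CI for Q1:", [round(x, 2) for x in ci1])
print("CI for Q2:", [round(x, 2) for x in ci2])
print("Intervals overlap:", overlap)
print("CI for Q1 - Q2:", [round(x, 2) for x in ci_diff])
print("Difference CI contains 0:", ci_diff[0] <= 0 <= ci_diff[1])
```

The overlap check says "not significant", while the difference interval excludes 0, which is exactly the disagreement the example is meant to show.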

Bottom Line

- Examining overlap in confidence intervals is an intuitive and natural way to judge statistical significance between two quantities.

- While this naïve approach may give you the right answer in some cases, it is not a valid test: it rejects too rarely when the null is true and has lower power when the null is false.

- Instead, remember to analyze the confidence interval for the difference between the two quantities.

Where to Learn More

Check the paper by Schenker and Gentleman (2001) on which this post is based. The authors go deeper into this question with simulations and a discussion of Type I error and power.

References

Cole, S. R., & Blair, R. C. (1999). Overlapping confidence intervals. Journal of the American Academy of Dermatology, 41(6), 1051-1052.

Schenker, N., & Gentleman, J. F. (2001). On judging the significance of differences by examining the overlap between confidence intervals. The American Statistician, 55(3), 182-186.
